The Solveit approach turns frustrating AI conversations into learning experiences

Disclosure: I took the Solveit course as a student. Later, after seeing what I built and shared in the Discord community, AnswerAI invited me to join their team.

"Here's a complete web app that does what you asked for!"

ChatGPT cheerfully responded, while presenting me with an intimidating wall of code. I had simply asked for help creating a small weather dashboard. At first glance, the code looked fine: imports, API calls, state management, and even error handling. But when I tried to run it, nothing worked. So of course, I pasted the error message and asked for a fix.

"Oh, I see the issue, here's the corrected version..."

...And two new bugs appeared. Three responses later, the code was worse than when I started. And more importantly, I had no idea what was going on.

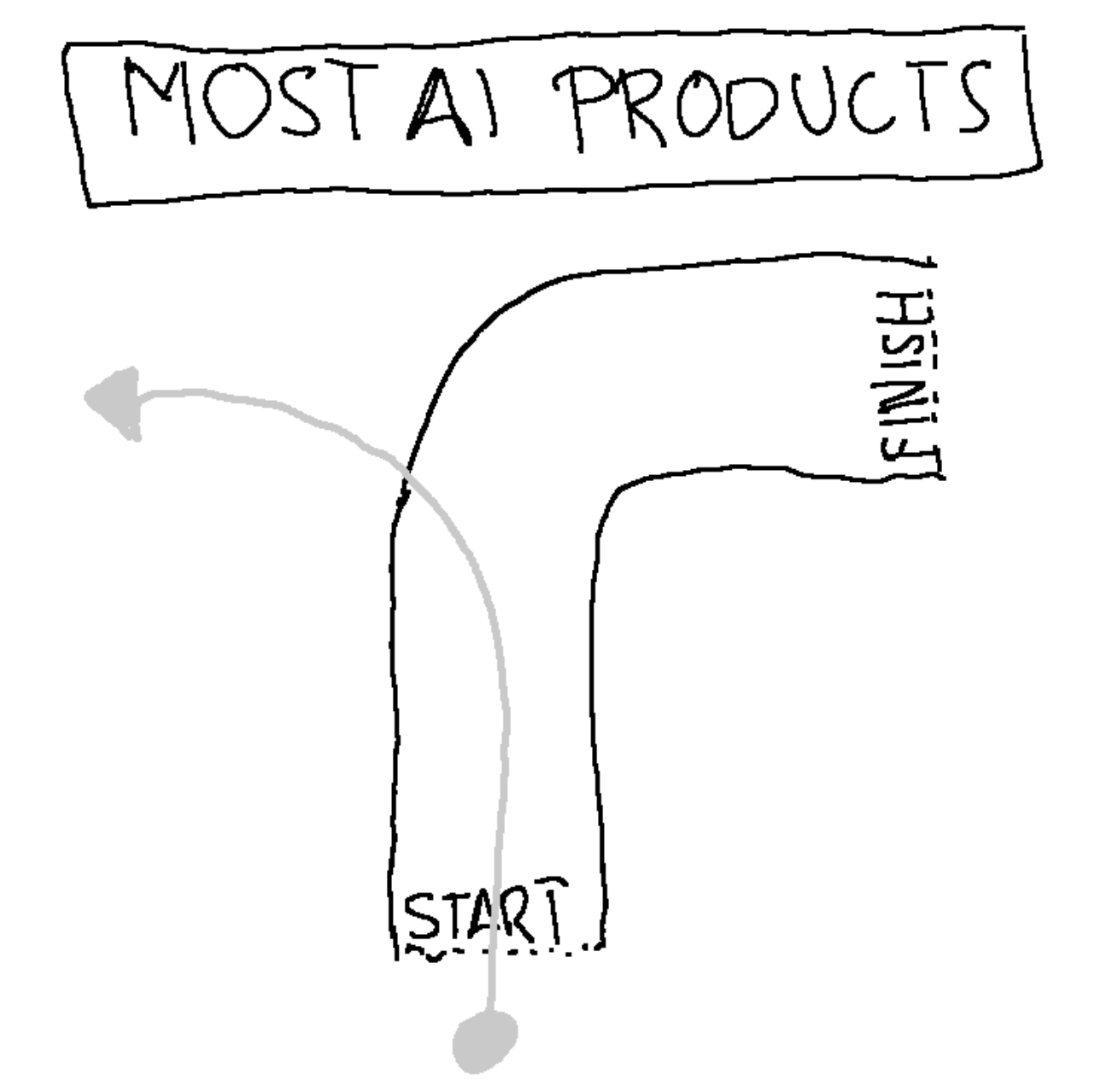

To me, most AI tools feel like using a self-driving car that all of a sudden decides to drive off a cliff.

This pattern, the "doom loop of deteriorating AI responses", is something I've encountered repeatedly. It's particularly frustrating because these AI tools seem so promising at first!

Enter Solveit, a tool designed specifically to transform these frustrations into learning experiences. In AnswerAI's "Solve It With Code" course, led by Jeremy Howard and Johno Whitaker, I learned not just how to use this tool, but the fundamental principles behind effective AI interaction that apply to working with any AI assistant.

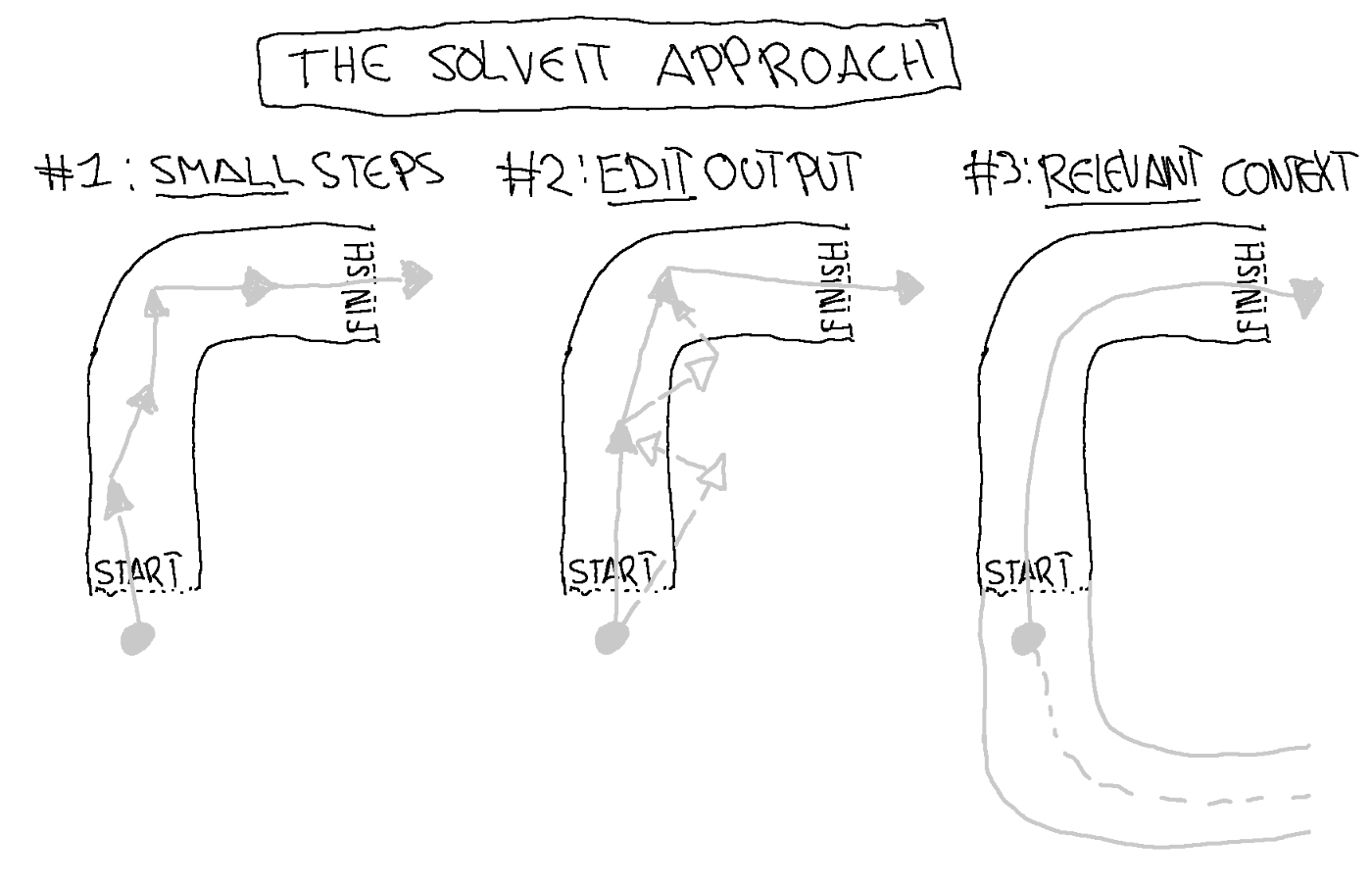

In this post, I'll share the three key properties of LLMs that cause the doom loop, and the three techniques that transform it into a learning loop. It's what the AnswerAI team has come to call "the Solveit approach."

The TL;DR of this post can be summarized with this table:

| LLM Property | Consequence | Solveit Solution |
|---|---|---|
| RLHF | Overeager to give long, complete responses | Work in small steps, ask clarifying questions, check intermediate outputs |
| Autoregression | Deterioration over time | Edit LLM responses, pre-fill responses, use examples |
| Flawed and outdated training data | Hallucinations and outdated information | Include relevant context |

Or if you're the visual type, with this beautiful illustration:

And while the course also introduced the "Solveit" tool, which is specifically designed to work with this approach, the principles apply to any AI interaction, whether you're using ChatGPT, Claude Code, GitHub Copilot, Cursor, or any other AI tool.

So even if you don't have access to Solveit, I'm sure you'll find something valuable in this post.