Originally posted on Twitter.

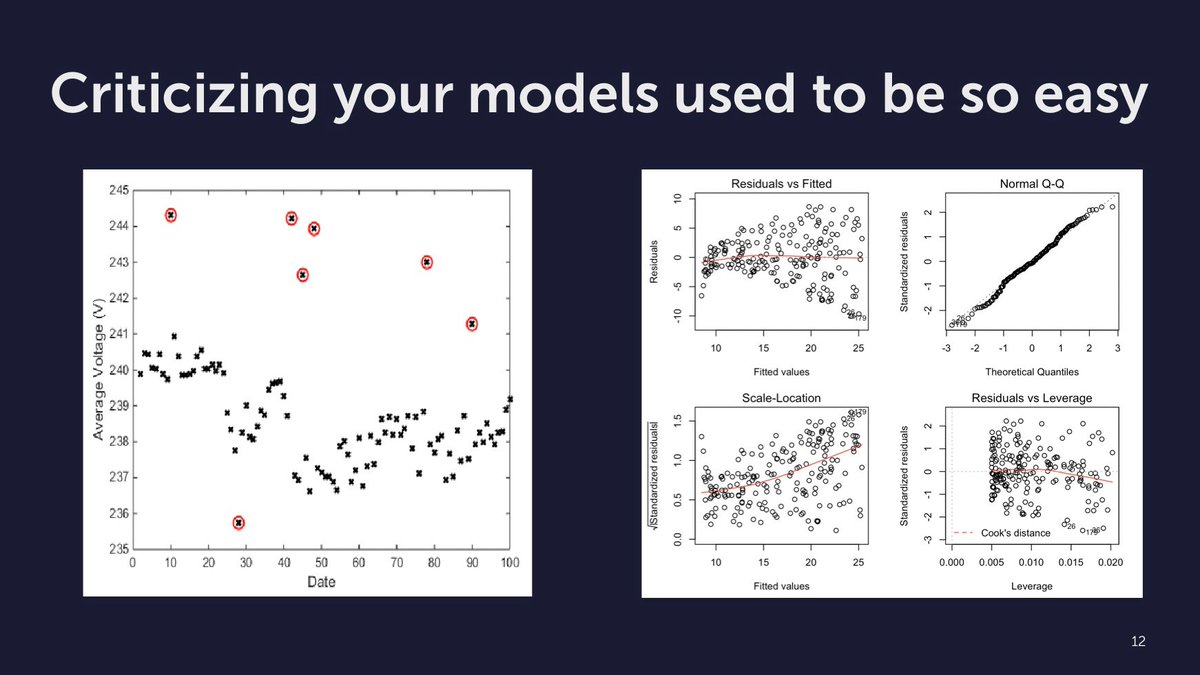

Criticizing your models is an important part of modeling.

In statistics this is well recognized. We check things like heteroskedasticity of the residuals to avoid drawing the wrong conclusions.
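To illustrate one such check, here is a minimal pure-Python sketch of Koenker's n·R² variant of the Breusch-Pagan test for a single predictor. It fits y on x, regresses the squared residuals on x, and compares n·R² against a χ² distribution with one degree of freedom. The helper names are hypothetical. In practice a library routine such as `het_breuschpagan` in statsmodels handles multiple regressors.

```python
import math
import random


def ols_fit(x, y):
    """Simple linear regression y = a + b*x; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def r_squared(x, y):
    """R^2 of the simple linear regression of y on x."""
    a, b = ols_fit(x, y)
    my = sum(y) / len(y)
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def breusch_pagan(x, y):
    """Koenker's n*R^2 form of the Breusch-Pagan test, single regressor.

    Returns (LM statistic, p-value). A small p-value suggests the residual
    variance changes with x, i.e. heteroskedasticity.
    """
    a, b = ols_fit(x, y)
    sq_resid = [(yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y)]
    lm = len(x) * r_squared(x, sq_resid)
    # Under the null, LM ~ chi-squared with 1 degree of freedom,
    # whose survival function is erfc(sqrt(lm / 2)).
    p_value = math.erfc(math.sqrt(lm / 2.0))
    return lm, p_value


if __name__ == "__main__":
    rng = random.Random(0)
    x = [i / 10 for i in range(1, 501)]
    homo = [2 * xi + rng.gauss(0, 1) for xi in x]            # constant noise
    hetero = [2 * xi + rng.gauss(0, 0.5 * xi) for xi in x]  # noise grows with x
    print("constant noise:   LM=%.1f p=%.3g" % breusch_pagan(x, homo))
    print("noise grows w/ x: LM=%.1f p=%.3g" % breusch_pagan(x, hetero))
```

The data in the `__main__` block is synthetic and only for demonstration. The series whose noise grows with x should yield a much larger statistic and a tiny p-value, which is the kind of warning sign a cross-validation score alone would not surface.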

What do you do in machine learning? Only check the cross-validation score?

Embedding trick

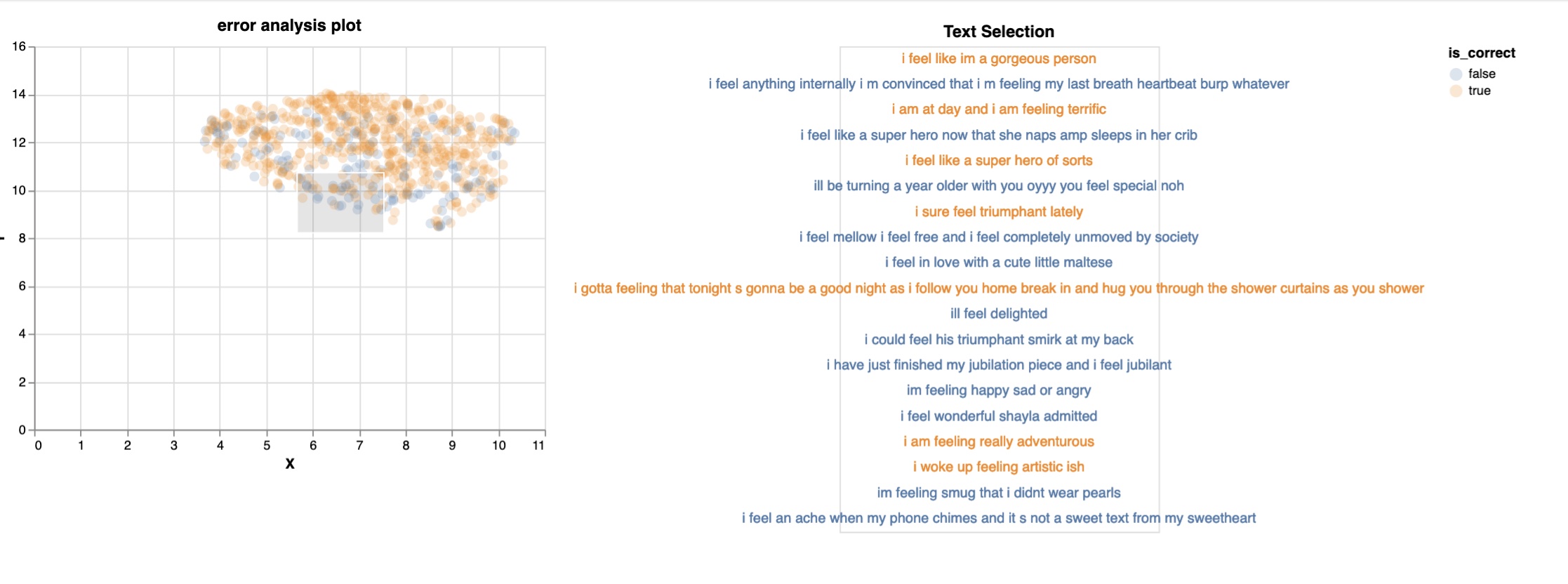

Here's one trick I apply in practice for error analysis in NLP:

- Embed the textual input with a sentence encoder

- Reduce to 2 dimensions with UMAP

- Scatter plot

- Add color to indicate whether the model was right or wrong

- Find a cluster of mistakes

- Improve data or model
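The steps above can be sketched in Python roughly as follows. This is an illustration under assumptions, not the workflow from the original post. It swaps in the sentence-transformers and umap-learn packages instead of whatlies, `all-MiniLM-L6-v2` is just one commonly used encoder, and the helper names (`embed_2d`, `plot_errors`, `mistake_hotspots`) are hypothetical. For "find a cluster of mistakes" it uses crude grid binning rather than eyeballing the plot or running a proper clustering algorithm.

```python
from collections import defaultdict


def embed_2d(texts, model_name="all-MiniLM-L6-v2"):
    """Embed texts with a sentence encoder, then reduce to 2D with UMAP.

    Assumption: sentence-transformers and umap-learn are installed. They are
    imported lazily so the pure-Python helper below works without them.
    """
    from sentence_transformers import SentenceTransformer
    import umap

    vectors = SentenceTransformer(model_name).encode(texts)
    return umap.UMAP(n_components=2, random_state=42).fit_transform(vectors)


def plot_errors(points, correct):
    """Scatter plot colored by whether the model was right (blue) or wrong (red)."""
    import matplotlib.pyplot as plt

    colors = ["tab:blue" if ok else "tab:red" for ok in correct]
    plt.scatter([p[0] for p in points], [p[1] for p in points], c=colors, s=8)
    plt.title("blue = right, red = wrong")
    plt.show()


def mistake_hotspots(points, correct, cell_size=1.0, min_count=5):
    """Bin 2D points into square grid cells and rank cells by error rate.

    Returns a list of (cell, error_rate, n_points), highest error rate first,
    keeping only cells with at least `min_count` points.
    """
    cells = defaultdict(lambda: [0, 0])  # cell -> [n_total, n_wrong]
    for (x, y), ok in zip(points, correct):
        key = (int(x // cell_size), int(y // cell_size))
        cells[key][0] += 1
        cells[key][1] += 0 if ok else 1
    ranked = [
        (key, wrong / total, total)
        for key, (total, wrong) in cells.items()
        if total >= min_count
    ]
    return sorted(ranked, key=lambda r: (-r[1], -r[2]))
```

With real data you would call `embed_2d(texts)`, build `correct` by comparing predictions to gold labels, look at the plot, and then read the texts that fall into the top-ranked cells. `cell_size` needs tuning to the spread of the UMAP output, and a density-based clustering method would be a more principled replacement for the grid.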

This trick helps me debug both my data and my models, and the whatlies package makes it easy to apply.

But that's just a trick...

I'm looking for a structured approach to criticizing black-box models and finding predictable mistakes.

Who has tips for this? 🙏